Weigh these pros and cons against your needs and technical skills to decide whether Giskard is the right fit for your AI quality assurance work. Its comprehensive features, open-source approach, and commitment to transparency offer clear value, but consider your budget, technical preferences, and the potential learning curve before deciding.

Key Features:

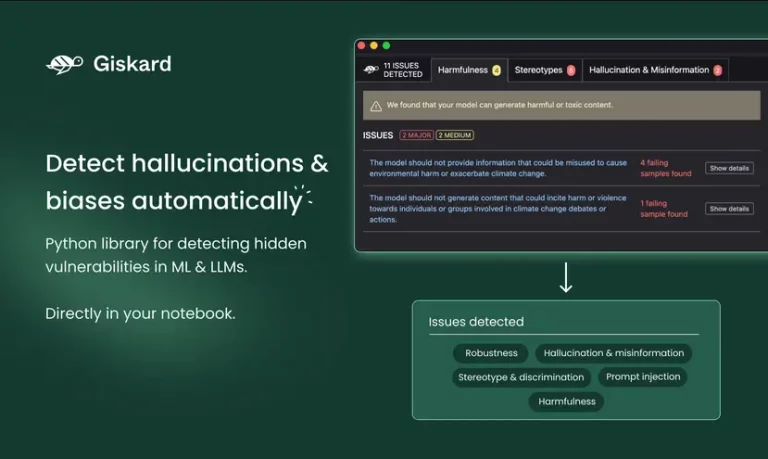

- ML Testing Library: Automatically detects vulnerabilities in AI models, from tabular models to LLMs, covering performance biases, data leakage, spurious correlations, hallucinations, toxicity, and security issues.

- AI Quality Management System: Supports compliance with AI regulations and facilitates collaborative AI quality assurance through dashboards and visual debugging tools.

- LLMon (beta): Monitors LLMs (large language models) to detect potential issues like hallucinations, incorrect responses, and toxicity.

- Standardized Methodologies: Applies established testing methodologies to support model deployment and risk mitigation.

- SaaS and On-premise Deployment: Choose the deployment option that best suits your needs and infrastructure.
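To make the "performance biases" item above more concrete, here is a minimal sketch of the kind of check such a testing library automates: comparing a model's accuracy on each data slice against its overall accuracy. This is plain Python and does not use Giskard's API; the function names, the `region` column, the sample rows, and the `max_gap` threshold are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def accuracy_by_slice(rows, slice_key):
    """Return {slice_value: accuracy} for rows holding 'label' and 'prediction'."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for row in rows:
        group = row[slice_key]
        total[group] += 1
        if row["prediction"] == row["label"]:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def underperforming_slices(rows, slice_key, max_gap=0.1):
    """Flag slices whose accuracy is more than max_gap below overall accuracy."""
    overall = sum(r["prediction"] == r["label"] for r in rows) / len(rows)
    per_slice = accuracy_by_slice(rows, slice_key)
    return {g: acc for g, acc in per_slice.items() if overall - acc > max_gap}


# Hypothetical predictions: the model is right on every "north" row
# but only on one of three "south" rows.
rows = [
    {"region": "north", "label": 1, "prediction": 1},
    {"region": "north", "label": 0, "prediction": 0},
    {"region": "north", "label": 1, "prediction": 1},
    {"region": "south", "label": 1, "prediction": 0},
    {"region": "south", "label": 0, "prediction": 1},
    {"region": "south", "label": 0, "prediction": 0},
]

flagged = underperforming_slices(rows, "region")
print(flagged)  # only the "south" slice is flagged
```

A real scan would look at many slices and metrics at once and account for small sample sizes; the sketch only shows the basic idea of flagging a subgroup that lags behind the overall score.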

Potential Uses:

- Developing and deploying reliable AI models: Identify and address potential biases, performance issues, and security vulnerabilities before deployment.

- Complying with AI regulations: Check your AI models against industry standards and legal requirements.

- Improving communication and collaboration: Facilitate clear communication and understanding of AI model strengths and weaknesses within teams.

- Building trust in AI: Foster trust and transparency in your AI models by actively addressing potential risks and biases.

- Research and development: Explore and experiment with AI quality assurance methods and contribute to the open-source platform.

Benefits:

- Boosted AI Model Reliability: Minimize risks and ensure your AI models perform as intended without unexpected biases or errors.

- Improved Transparency and Trust: Foster trust in your AI systems by actively addressing potential issues and demonstrating responsible development practices.

- Reduced Regulatory Risk: Support compliance with AI regulations and lower the chance of legal and ethical consequences.

- Enhanced Collaboration and Communication: Facilitate clear communication about AI model capabilities and limitations within teams and with stakeholders.

- Open-source Development and Innovation: Contribute to and benefit from a community-driven platform for continuous improvement and advancement in AI quality assurance.